Design patterns have been talked about in OOP design theory for a couple of decades now. Design patterns are a useful thing to study, and something that I think universities don’t emphasize enough.

I’d speculate without even doing a Google search (too lazy to hit alt+tab) that probably Grady Booch named many of the original ones. He co-wrote a book called “Design Patterns” back in the 90s that started many of these conversations and there was a even a horrible markup language called “Booch Notation” back in the day that basically no one ever used. Forgive me if I botch his name and/or the history of this stuff… I really don’t care who gets credit/naming rights for this stuff. I think that naming algorithms (or anything for that matter) after yourself is a vain and sleazy thing to do.

Anyway… design patterns evolved. They can be thought of as programming “theory”, but in the sense that theories might be needed to build the golden gate bridge, there’s generally no one-size-fits all answer to any large engineering project. Design patterns, however can teach your inner ninja to build and react and solve higher level problems. They don’t always fit into your application verbatim, but they can be seen as a mere book of design ideas worth studying if you want to build a complex piece of software.

Most design patterns fall into the categories of Structural or Functional, but a new category has emerged in the last few years to describe “Threading Patterns” or “Concurrency Patterns”. These are design patterns that reflect how to coordinate resources among multiple threads in multi-threading environments.

A community of people don’t always agree on things, so I have no idea who is deciding on names for things or whether names have even been decided already on such things… or whether who is the final decision maker on what the names should be (probably whoever edits the Wikipedia page).But somehow the world will just eventually decide on something to put in the textbooks… and hopefully it wont be some vain asshole naming things after himself.

Since I’m a guy who has spent most of his career in multi-threaded environments, I figured I’d mention a few patterns that I extracted from common-sense over the years. I don’t claim ownership of these ideas, and I’m sure that there are hundreds if not thousands of people out there who thought of the same thing and named them something else… whatever. Maybe you’ll find some of these patterns useful to employ as some of them are a outside-of-the-box.

Some under-discussed Thread Concurrency Patterns

1) Volatile Progression – I’ve called this pattern the “volatile progression” for years, although I’m noticing that someone on Wikipedia named some pattern “Leaders/Followers” which I imagine is much of the same thing, although Wikipedia doesn’t elaborate on what it is at all… but simply lists it.

The idea behind a volatile progression is that you can use an atomic volatile variable/member variable to coordinate resources among multiple threads, just so long as that variable moves (progresses) in one single direction. Doing this eliminates the need to repeatedly acquire more expensive locks/semaphores.

I originally implemented this in the 90’s sometime when I built a video processing engine.

For example, you can use 1 thread to download a large file while 1 or more additional threads modify on the file as it is downloaded without using any locks during processing. The only mechanism coordinating access to the shared data is a single, atomic, volatile lockless variable.

The first thread to come into play, in this example is downloading/reading the file in question. The download thread might tracks the number of bytes downloaded in a volatile variable, that is publicly accessible to the other threads.

The second thread (first processing thread/filtering thread) will look at this volatile variable and it will understand that it is allowed to interpret/filter the file, just as long as it doesn’t work past the number of bytes that have been downloaded (as reported by the first thread). This thread can also update a volatile variable of its own to communicate to the next thread in the chain how much data it has processed.

A second processing/filtering thread can look at the first processing thread’s volatile variable and understand that it can apply filters to the file, just as long as it doesn’t go beyond the point processed by the thread higher in the stack.

Using this technique, you can avoid having to use any locks/critical sections to protect the data and run many filters simultaneously against a common data pool. It is completely lock-less, although you might want to reduce spinning by employing some events to efficiently manage wait-states.

2) Volatile Test Before Lock – Wikipedia recently named this “Double Checked Locking” Pattern.

The idea here is that if your access to a particular resource is not essential at a given time, you can preemptively avoid an expensive lock or try-lock call locking by checking a volatile flag.

For example, say you have a worker thread that is always running and working on something. Periodically, the worker checks a particular queue to see if there’s work for it to do in that particular area. It can look at a variable or a “hint” as it is sometimes called to determine whether or not it is worth its time to even bother acquiring the lock and checking for work. If the hint is satisfied that there’s work to do, then the lock can be acquired and work will be obtained. This is ideal for situations where over-locking, simply to check for work can impact performance on items entering the queue.

3) Flood Dam

If you really have performance problems with data entering your work queue… build a “Flood Dam”. I use this in my high-performance command processing queues. The idea here is that items enter the queue in two stages. There is an “incoming work” queue that is separate from the items that are actually being worked on. When the command processing thread needs new work and runs out of work to do, it simply empties the incoming work queue into the main work queue in one big swoop. This way, items that are entering the work queue do not interfere with work being done and vice verse.

4) Multi-queue

Certainly if you can build a queue that processes items in a background thread, you can also build a queue that offers multiple processing threads (ideally one per CPU core) and distributes the load automatically to the queue with the least amount of work backup. This approach is simple and effective when you have small operations that need to be completed and don’t want the overhead of a complex thread-pool architecture. Sometimes managing your queues can require more overhead than the actual work going into the queue so choosing the right type of queue is essential for performance. I typically use 3 different background processing classes of varying complexity

5) Command Resource Coordinator

To go along with #4 above, the command resource coordinator is my most complex of command/queue processing designs. In it, each command put into a queue is also tagged with a structure that describes how it intends to use various resources. The resources can be, network, memory, CPU, disk, or any other kind of custom resource that can be named by a string. A command that is 100% disk intensive, would ideally be run when no other disk intensive commands are being run. A 100% CPU intensive command would be run when there are enough cores available to process it. The command resource coordinator performs intelligent analysis on the commands that are in the queue and attempts to process them in an optimal order.

6) Named Locks

The idea behind a named lock is that you can control access to an infinite number of objects with a singleton lock coordinator by simply naming the locks. When a thread wants a lock, it has to ask the lock coordinator for it, identified by a string (or whatever is appropriate for your application). Any other threads that ask the lock coordinator for a lock with the same name will be denied the lock until the first thread releases the lock, however the lock coordinator will grant locks to other threads that ask for locks with names that do no match any locks already in the coordinator’s internal list of locks. This isn’t ideal for locks that are highly contended for because there will likely be internal implementation locks used by the coordinator to manage its own lists of locks. Ideally, the locks served by the named locking system would be held for longer periods of time when compared to a typical critical section, otherwise, you might as well just use a critical section.

7) Region/Record Locks

Region locks are nothing new, but for some reason I don’t see them in any concurrency pattern lists anywhere, so I figured I’d mention them.

The idea behind a region lock can be implemented in a manner similar to named locks, but the idea can be taken a step further. When a thread desires a resource, it asks the lock coordinator for it along with a start and end address. The lock coordinator will not grant the lock if the region overlaps any regions that are already locked by other threads. This kind of lock is available on files using the SAMBA protocol and is supported by the file systems of all the major operating systems, so you can actually use it to coordinate file access for multiple computers across the network if you want.

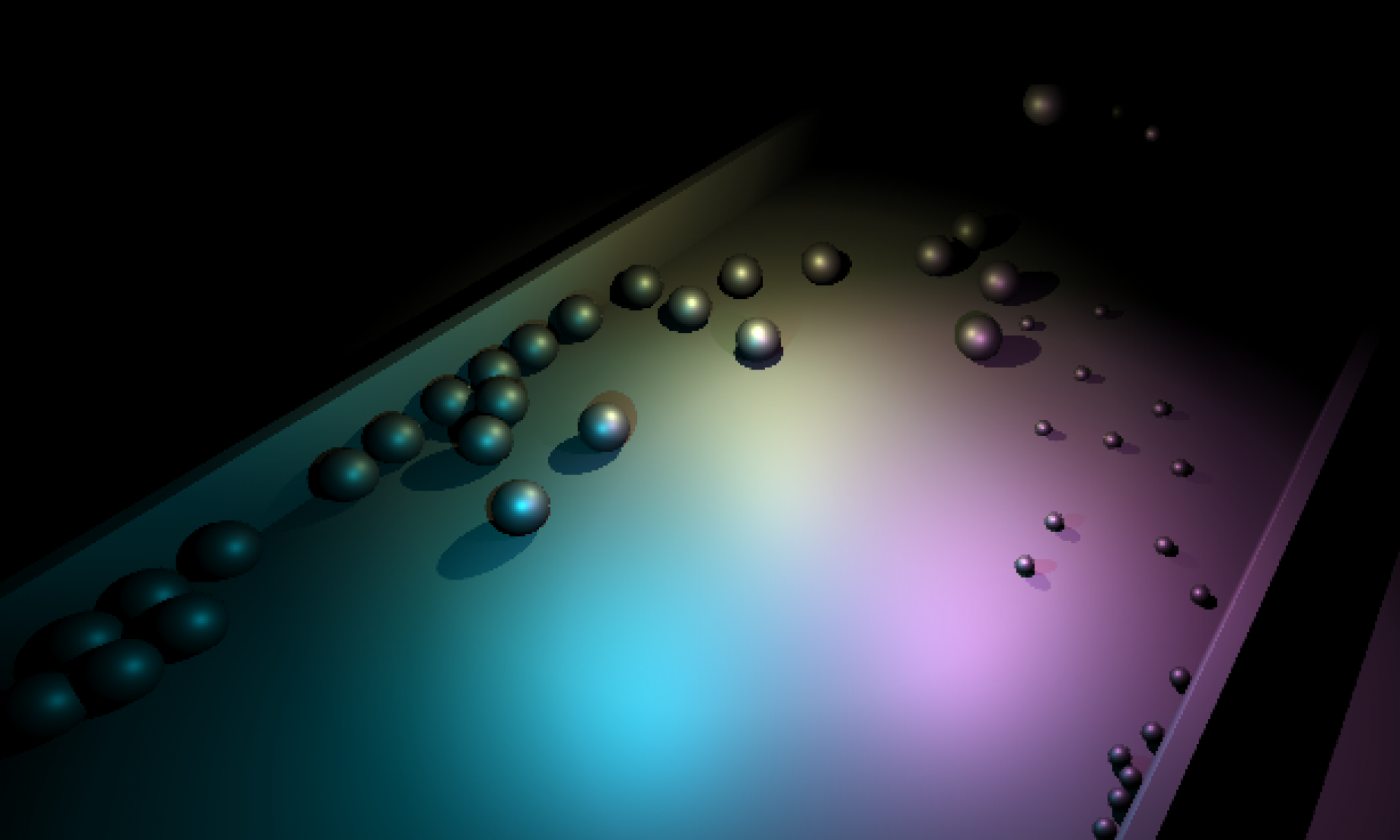

However, this same generic concept can be taken into two dimensions, where you might want to lock a rectangle on a bitmap. Take it even further and you can potentially lock more complex geometry like circles, polygons, etc. (although the expense of doing anything more than a rectangle or circle might be prohibiting).

Take the concept even further into the 3rd dimension and lock cubes or spheres. Cubes and spheres are all easy to detect collisions among. If you want to prove your brilliance, write a PhysX implementation.

If you’re really nuts, implement a region lock in 4 dimensions, have fun troubleshooting potential deadlocks though You might want to make sure that no threads ever use more than one region lock at a time, because dead-lock risk is high. These types of locks will be roughly as efficient as “named” locks, mentioned earlier.

8) Optimistic locking

There are a number of different strategies to implementing “optimistic” locking that are application specific, but the core concept behind optimistic locking is to perform volatile reads on data, without locks, and use a transactional system to validate the data before and after processing.

One way to do this might be to create an “actor” object. The actor obtains the data it needs and performs integrity checks on it. If something is wrong (e.g. a checksum is invalid). The actor will try to fetch the data again via a retry mechanism, until it either gives up or gets the data intact. The actor then performs whatever operations on the data. It is also common that at the end of processing, it may want to store the results back. When it does this, it submits a transaction, upon which the data is validated again to determine if it was changed during the period of time the actor was operating on the data. If the data was changed, the transaction fails and the whole process starts over from the beginning where the actor refetches the latest data.

This sounds wasteful, and in the wrong environment, it definitely can be. However, in many situations , it is the fastest way to do things. In fact, virtually the entire internet is built upon this kind of paradigm as it is the foundation for Ethernet protocols, including wireless Ethernet protocols where the strategy for getting data from point A to B is to first, simply blast the data over the wire, second validate the data, and third request retransmission of data if it is invalid.

Appreciate the write-up. It’s genuinely refreshing to see some old and new patterns expressed in such a clear-cut manner. Flood Dam and Command Resource Coordinator seemed particularly smart strategies to deal with performance issues in high load environments. However, I’ve got a question about multi-queue. How do you decide when it’s more beneficial to stick to one queue and use something like the Flood Dam compared to when you scale out to multiple queues? Is it entirely based on the nature of tasks and the burden they put on various system resources?

You’re hitting the nail on the head with the multi-queue scenario. It really boils down to the nature of the tasks and resource usage. It’s essentially a balancing act—if you notice that tasks are relatively uniform and light on resource demand, a simple Flood Dam might suffice for efficiency. On the flip side, if tasks are more varied and resource-intensive, distributing across multiple queues mapped to CPU cores can prevent bottlenecks and utilize your system’s capabilities more effectively. Always keep an eye out for that overhead-to-benefit ratio when deciding.

Spot on with the ratio talk, Dominic. I’d throw in that you must consider your system’s chokepoints. If your tasks are I/O bound rather than CPU, juggling queues might give you diminishing returns. It’s almost an art form, isn’t it? Balancing efficiency against complexity. Got to watch out for over-engineering – a trap we all can fall into, ey?

Exactly! It’s all about that overhead-to-benefit ratio! And @Zavier Edwards, you said it, buddy – it’s totally an art form. And yes, the dreaded over-engineering is always lurking round the corner waiting to pounce. Keep it simple, but not too simple, right?