With the Nvidia GeForce RTX 3090 release just around the corner, I gotta be honest… I, and a lot of other people, felt pretty ripped off by the 2000 series GPUs. Only a privileged few could actually afford the 2080 Ti, which was the only card that came close to delivering acceptable performance in RTX-enabled games at high resolutions. Will I bend over and accept the $1600 cost of upgrading to a GeForce RTX 3090 when it launches Sept 24, 2020? Let us ponder…

The entire 2000 series came and went with just a handful of titles actually supporting ray tracing. Most of that support offered only marginal visual appeal, at unacceptable frame rates, and at a cost of around $1500 for one GPU.

So, I did what any impatient technology enthusiast would do when underwhelmed by new technology… I bought a second one.

Having never tried an SLI setup, I’m the one dumbass who went out and bought a $250 (WTF!) SLI bridge and a second 2080 Ti in the hopes of improving the horseshit performance I was experiencing.

I figured that SLI had been around for a long time and was a proven, established technology, built into the GPU drivers, so a second GPU would seamlessly team up with the first to share the rendering load and double the performance of like… everything…

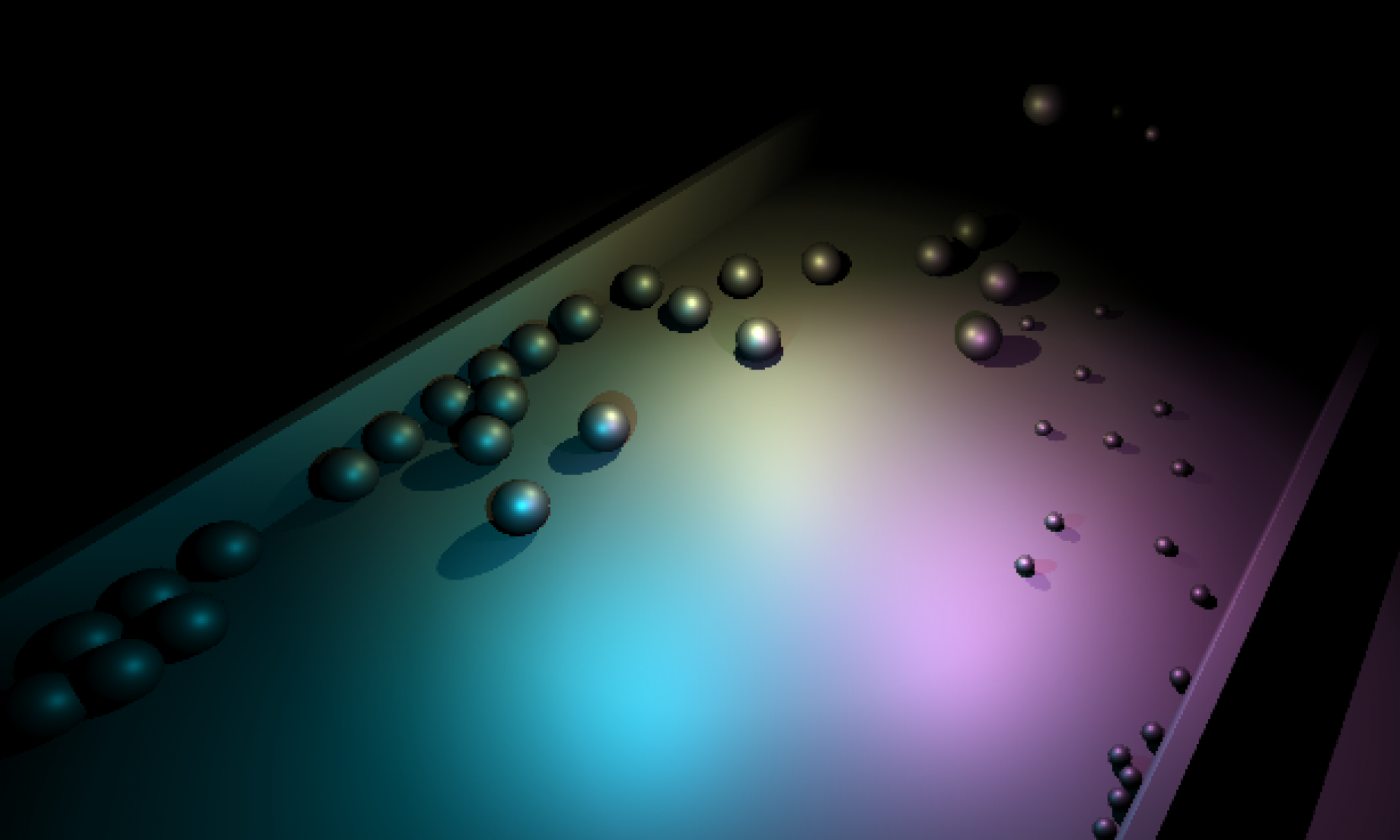

I thought SLI was seamless, baked into the drivers… it wasn’t, especially under DX12. Virtually nobody had written the “mGPU” code required to make SLI work in DX12. The only thing I could initially get to work with it was the Port Royal benchmark… which still performed, and looked, like shit, and offered less visual appeal than just watching it play back on YouTube for 4 minutes.

I thought SLI was long established and that all the engines had figured out how to support it long ago. Maybe that was the case for DX11 and older engines, but the DX12 engines required to run RTX code were completely lacking SLI/mGPU support. It turned out that supporting it required developers to write too much new code to cater to the tiny fraction of the market willing to shell out $3300+ for GPUs… which is virtually nobody. So basically none of the devs ever gave a fuck about RTX SLI… ZERO supported SLI out of the box, and only a couple released patches 6 months later… long after I had finished and gotten bored of the games anyway.

The only thing I found I could do with SLI was run the 3DMark Port Royal benchmark… essentially a 3-minute cutscene that didn’t really look all that good and ran at 14fps at 4K on a single 2080 Ti and maybe 28fps on two… still garbage. Most of the game devs NEVER finished their SLI code, and still don’t support it… In the end I dropped about $3500 on GPUs plus the expensive SLI bridge… $4000 if you include the water-cooling stuff at $250 per water block.

I spent a lot of money… for about 2 hours’ worth of oohs and ahhs.

TWO years in, the only games that show off impressive RTX ray tracing are still:

Control, which has no SLI support and benefits from being a dreaded “corridor crawl” design, where the geometry is limited to tight hallways and few open spaces… a design that the industry sought desperately to move beyond in the 90s and 2000s, but couldn’t easily, because doing so killed framerates.

and… Minecraft… a game simple enough that you can murder the framerate with RTX without flinching too much.

All other titles offered very little or moderate value.

Metro Exodus looked pretty good with its global illumination stuff. However, they only used GI for the sunlight/moonlight, which muted the potential effect. Despite this, it was the best-looking game available until Control came along.

Shadow of the Tomb Raider had RTX effects so subtle that you might not even notice whether RTX was on or off. And since “hard” shadows are more dramatic, you might actually prefer the extra drama of leaving RTX off, even though it was technically less “realistic”.

Battlefield V… looked good on one map, and particularly good in one room of that one map. But since you were usually battling people online, the frame rate drop made it frustrating to play… you’d basically be guaranteed to lose every game if you chose to care about RTX. But, regardless, it wasn’t that great of a game anyway.

Other Insults to Injury

The 2080 Ti, and all the Nvidia GPUs that came before it, have some ugly limitations that many people probably weren’t aware of. If you want to connect TWO 4K displays to a system over HDMI, you can likely only get ONE of them to run at a 60Hz refresh rate at a time. The other would be stuck at 30Hz… completely unusable. At 30Hz, you can’t even do word processing… it is that bad.

To combat the problem, I connected the second 4K display to the second card. Since the second card’s outputs are unusable when SLI is enabled, this forced me to turn off SLI… a waste of the $250 I spent on the SLI bridge… but what did I care… I had watched the Port Royal benchmark 5 minutes earlier… I was done with it… time to turn it off and leave it off.

Will I buy a GeForce RTX 3090?

With all the disappointment of the 2000 series GeForce GPUs, I’m not that excited to shell out another $1600 for a new GPU when I feel like I’ve barely experienced the technology I bought 2 years ago. But I’m rich… I probably will anyway.

But it would make the decision easier to stomach if I knew the answers to a few questions.

- Can I connect multiple 4K HDMI displays at 60Hz?

- Can I trade in my old GPU for a much needed loyalty discount?

- Most of the size of the card appears to be thermal design, while the card itself is reported to be actually SMALLER than previous generations, so… what about water cooling?

- What’s up with this new power connector… do I need a whole new power supply? I mean, gawd, I just bought a 1200-watt PSU… and you’ll probably tell me I need to scrap it.

- Are you still going to try and up-sell me a Titan?

- Am I actually going to get 60fps in RTX games now?

- …Without cheating and turning on DLSS? As you probably don’t know (because nobody reads my blog)… I spend a great deal of time railing against “AI” and the bullshit ways people in marketing present it. DLSS… is cheating… and stupid.

- Can it play Crysis?